May 19, 2025

AI Knowledge Assistants are becoming essential across industries, from banking to healthcare to enterprise services. While generative AI can answer general knowledge questions well, it can’t answer questions specific to your business without access to your data. That’s where retrieval-augmented generation (RAG) comes in, using structured patterns to ground AI responses in real, business-specific content.

At Lunavi, we’re helping build assistants for internal knowledge support, customer service tied to support tickets and SOPs, banking use cases with customer data access, and even sales assistants powered by past proposals, RFPs, and case studies. But building these tools brings a new set of testing challenges…

AI chatbots are changing the way businesses interact with customers, employees, and internal users. They speed up response times, handle routine inquiries, and improve availability. But they don’t always get it right. A chatbot might misinterpret a question, deliver inconsistent answers, or simply confuse users.

Testing these AI-driven assistants manually is slow, repetitive, and doesn’t scale well. So we asked: Can AI help us test AI?

Manual chatbot testing can be frustrating. You might ask the same question twice and receive two different answers. Some responses are spot-on, while others are vague or misleading. Identifying these inconsistencies by hand is tedious—and often inaccurate.

Unlike traditional software, which follows predefined scripts, AI chatbots generate responses dynamically. That makes them powerful, but also unpredictable. Misunderstandings, incomplete answers, and inconsistent messaging can lead to frustrated users and reputational risk.

How do you validate responses when no two are exactly the same?

Manual review doesn’t cut it. It’s time-consuming and doesn’t scale with growing chatbot usage. That’s where AI testing comes in—bringing structure, scalability, and efficiency to chatbot validation.

At Lunavi, we believe in working smarter. So we developed an AI-powered chatbot testing framework using Playwright—an open-source automation tool. This system ensures chatbot interactions are reliable and high quality, even as they scale.

• Automated Response Validation

Compares chatbot replies to expected outcomes, reducing human error and improving accuracy. This is a great example of using AI to test AI, automating validation for AI-generated responses that aren’t always predictable or consistent.

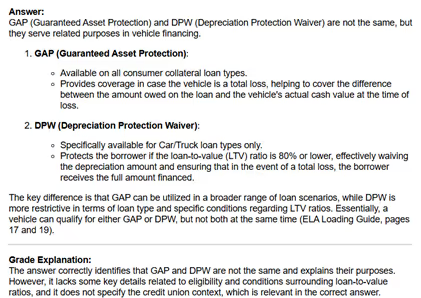

• Flexible Response Scoring

Instead of a simple pass/fail, responses are scored from 1 to 10. A score of 6 or higher is considered a pass. This flexibility was key, especially for AI outputs, where “close enough” might still be acceptable. It allowed us to capture nuance without being overly strict.

• CI/CD Integration

Fits into existing Continuous Integration/Continuous Deployment (CI/CD) workflows for seamless automated testing with every update.

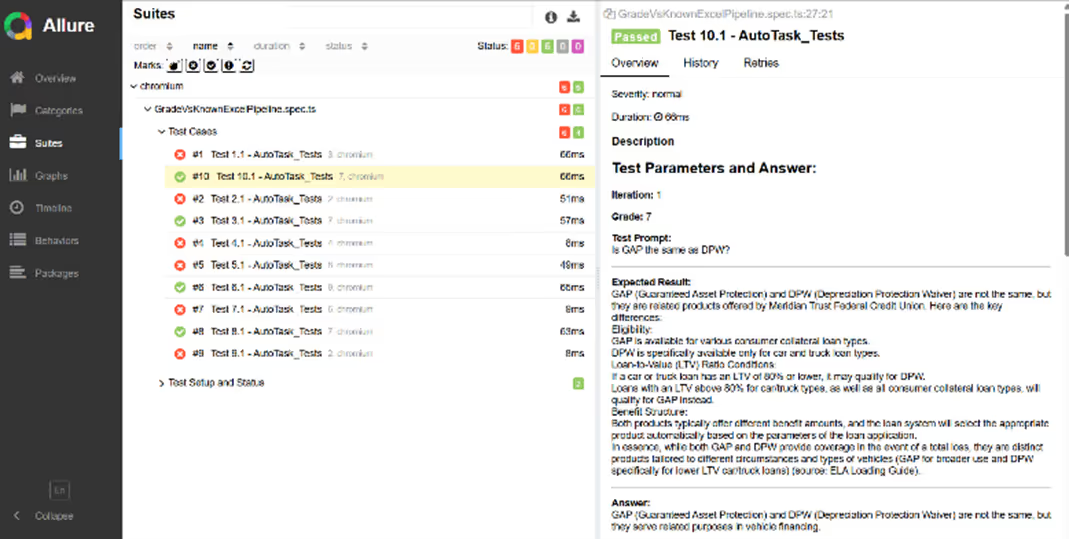

• Allure Reports for Visibility

Allure reports were used to visualize the grading results. It gave us a clean, easy-to-read report where we could spot low-scoring test cases at a glance. This was useful for debugging and iterating quickly.

Clicking into a test shows the full prompt, the AI-generated response, the expected result, and an explanation for how the score was graded.

• No-Code Test Management

Business analysts and QA testers can update test cases through a simple Excel file, no coding required. We don’t need to touch the code. Test prompts and expected results live in an Excel sheet. We simply update that file and re-run the framework. It’s a huge time-saver for QAs.

• Secure Authentication

API tokens ensure secure access to test environments.

• Regression-Aware Testing

When new test scenarios are added or model parameters (like temperature, top_p, or system prompts) change, the framework helps catch regressions in previously working responses—ensuring consistency across versions.

For organizations that depend on chatbots, this kind of automated validation is a game-changer:

• Saves Time and Resources: Reduces the manual workload, so teams can focus on strategic initiatives.

• Improves Customer Experience: Delivers consistent, high-quality responses that reduce user frustration.

• Supports Scalability: Grows with your chatbot usage without sacrificing accuracy or performance.

Even in early-stage testing, the efficiency benefits were clear. Manual validation would have taken roughly 1–2 hours, assuming 3–5 minutes per case to prompt, verify, and log results. With the automated framework, everything was executed and scored in under 30 minutes, roughly 60–75% time saved, while also generating visual reports for faster review.

Unlike some testing tools that focus only on validating LLM prompts in isolation, our framework tests the full production stack, from the application layer to the chatbot logic and all the way through to the LLM. This helps ensure that what works in testing also works live, in the hands of real users.

• AI Testing Needs Flexibility

Expecting perfect matches on AI output is unrealistic. The scoring system helped us embrace the “gray area” where a response might still be acceptable, even if it wasn’t word-for-word.

• Data Quality in the Excel Sheet Matters

The effectiveness of the tests heavily depends on the quality of prompts and expected outcomes in the Excel. We revisited our test cases often and tweaked them based on actual chatbot behavior.

• Keep Iterating Based on Results

The framework gave us insight, but I still needed to pair that with human review. When the bot consistently scored low on a certain prompt, it pushed us to rethink how the intent was trained.

• Easy Maintenance & Smooth Workflow

Because the framework was Excel-based and didn’t require any coding, I was able to handle all the testing myself. That made it easy to iterate quickly, update test cases as needed, and keep the testing process moving without needing constant developer support. It was lightweight, low-maintenance, and fit perfectly into our agile workflow.

• Scoring for Non-Deterministic Outputs

AI doesn’t always respond with the exact same wording — which makes traditional assertions like "Are Equal" ineffective. The scoring system (1–10 scale) helped account for acceptable variation in responses, making it a better fit for testing LLM-powered assistants.

• Handling AI Overreach

While AI can surprise us by solving use cases we didn’t expect, it can also generate responses outside intended scope. In this project, we did not encounter any such unintended use cases, but the framework allows for creating test scenarios where the assistant should recognize limits and respond accordingly, e.g., “I’m not able to help with that.”

At Lunavi, we’re always looking ahead. This framework uses AI to test AI—bringing structure to validating non-deterministic outputs and ensuring chatbot quality at scale. While we don’t offer this framework as a standalone product, we use and provide it as part of every AI solution we deliver for our clients.

One of the key concerns businesses have when deploying AI is the fear that it may act outside its intended boundaries, potentially giving incorrect or risky answers. Our framework helps reduce that risk by validating how the assistant responds across known and edge-case scenarios.

Wherever you are in your AI journey, whether you're exploring ideas, building your first solution, or validating quality before launch, we’d love to help. Let’s connect and see how we can support your next step.