October 7, 2025

For software delivery teams and developers, the promise of AI is clear: faster, more consistent code. So is the challenge of proving its value. As organizations explore AI-powered tools for software delivery, the key question shifts from “Can we use AI?” to “How do we measure the productivity gains?” That’s where an AI maturity framework can help.

When a team decides to incorporate AI into its projects, a common question quickly arises: “How much time are you actually saving by using AI?” Measuring efficiency improvements is genuinely challenging. Ideally, you would perform the same task twice, once using traditional methods and once with AI assistance. To keep the results unbiased, however, a different person should perform the second attempt, so that nothing learned in the first round influences the second.

This approach, while methodologically sound, is not practical from a budget standpoint. In test case design, for example, repeating the activity means one person completes the task traditionally while another performs the same task using AI tools. This keeps the measurement of AI’s impact fair and accurate, but it can double the effort required for a single activity, which makes it impractical for most teams.

Other activities, such as requirements elicitation, can also benefit from AI. Measuring effectiveness there is even more complex, however, because it involves multiple people, including stakeholders. The point is that deciding *how* you will measure AI’s effectiveness is itself a step toward greater maturity in the use of AI.

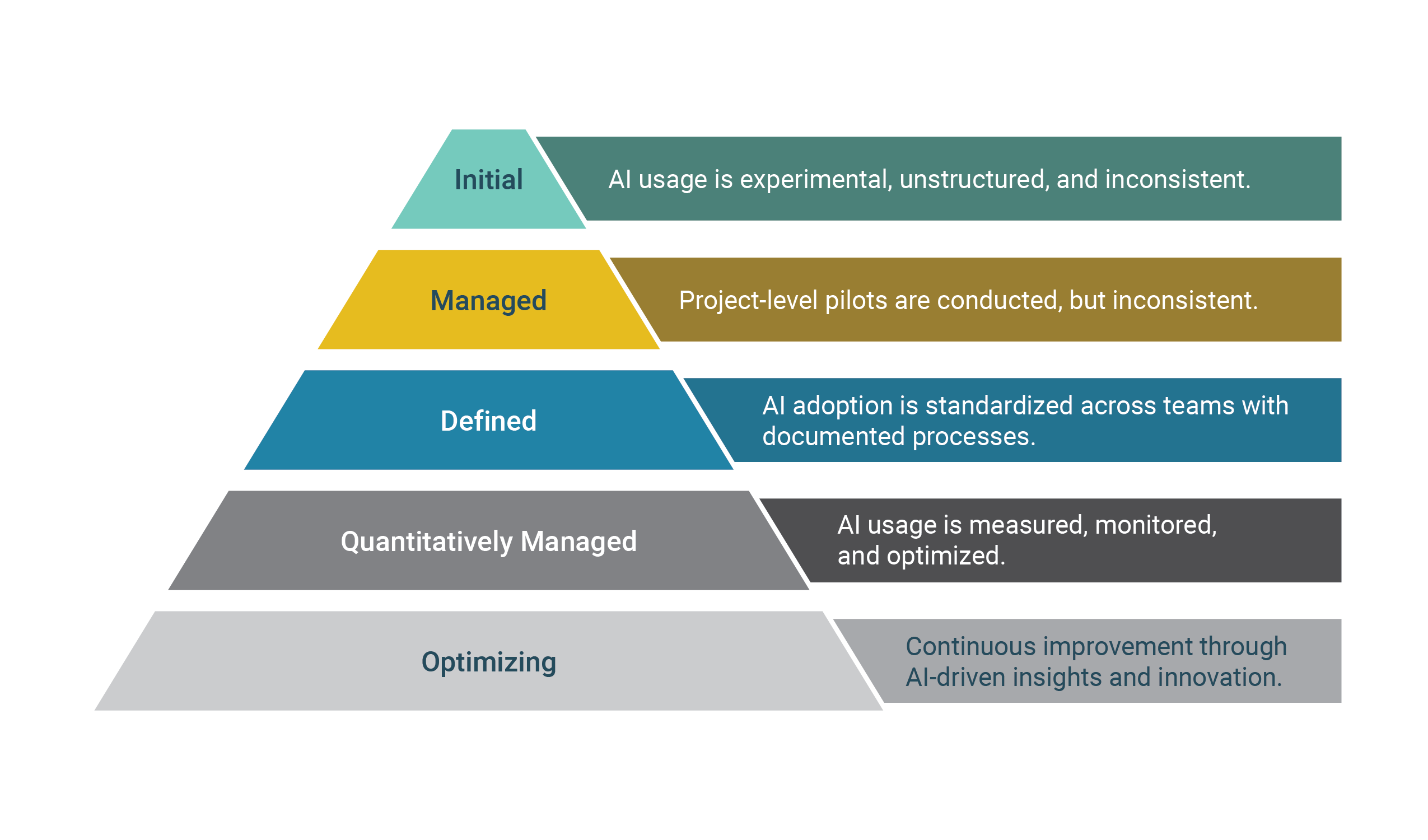

Our objective is to use AI in software delivery in ways that lead to greater efficiency and effectiveness. To achieve this, we needed a methodology for assessing both our current state and our progress toward the state we want to reach. We therefore adopted an industry-standard maturity model that defines five levels of AI integration.

This Maturity Model consists of the following:

In the initial stages, most teams apply AI inconsistently. For example, some teams may pilot meeting transcription or automate test case design without formally measuring the outcomes. As AI maturity advances, these practices become standardized and measured, and they ultimately drive continuous improvement throughout the software development lifecycle.

Teams should concentrate on activities where improvements can be quantified, such as requirements analysis, test design, and test automation. Decide in advance how each activity will be measured; options include conducting surveys or executing the activity in parallel, once manually and once with AI. By comparing the efficiency of manual processes with that of AI-assisted ones, teams can identify where AI provides value and, just as important, where it doesn’t. This insight is essential for making AI adoption sustainable, optimized, and mature.
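To make the comparison concrete, here is a minimal Python sketch, assuming each activity is executed in parallel and the effort of the manual and AI-assisted attempts is recorded in hours. The `Measurement` record and the `percent_time_saved` and `summarize` functions are hypothetical illustrations, not part of the maturity model; the percentage formula is just one simple way to express time saved. A negative result flags an activity where AI did not help.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Measurement:
    """Hypothetical record of one parallel run: same task, done both ways."""
    activity: str
    manual_hours: float  # effort of the traditional attempt
    ai_hours: float      # effort of the AI-assisted attempt (different person)


def percent_time_saved(manual_hours: float, ai_hours: float) -> float:
    """Relative reduction in effort: 100 * (manual - ai) / manual.

    Positive means AI saved time; negative means the AI-assisted attempt took longer.
    """
    if manual_hours <= 0:
        raise ValueError("manual_hours must be positive")
    return 100.0 * (manual_hours - ai_hours) / manual_hours


def summarize(measurements: list[Measurement]) -> dict[str, float]:
    """Average percent time saved per activity across all recorded runs."""
    by_activity: dict[str, list[float]] = {}
    for m in measurements:
        by_activity.setdefault(m.activity, []).append(
            percent_time_saved(m.manual_hours, m.ai_hours)
        )
    return {activity: mean(values) for activity, values in by_activity.items()}


# Usage with made-up numbers, for illustration only.
runs = [
    Measurement("test case design", manual_hours=10, ai_hours=6),
    Measurement("test case design", manual_hours=8, ai_hours=6),
    Measurement("requirements analysis", manual_hours=4, ai_hours=5),
]
for activity, saved in summarize(runs).items():
    print(f"{activity}: {saved:.1f}% time saved")
```

Averaging per activity, rather than pooling all runs, keeps an activity where AI helps from masking one where it doesn’t.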

To effectively implement this model, start by reviewing current operations and selecting pilot activities that are likely to demonstrate measurable benefits from AI integration. Define specific maturity levels for each activity and incorporate mechanisms to collect feedback and monitor progress. We have found it most effective to work with teams that are ready for AI adoption and can accommodate the minor interruptions that measurement requires. This approach helps teams move beyond isolated AI applications to a state of ongoing improvement, where AI systematically enhances productivity and quality across software delivery.

By sharing this approach, we aim to help IT professionals navigate the journey from AI experimentation to measurable, sustainable value in custom software delivery.