November 5, 2025

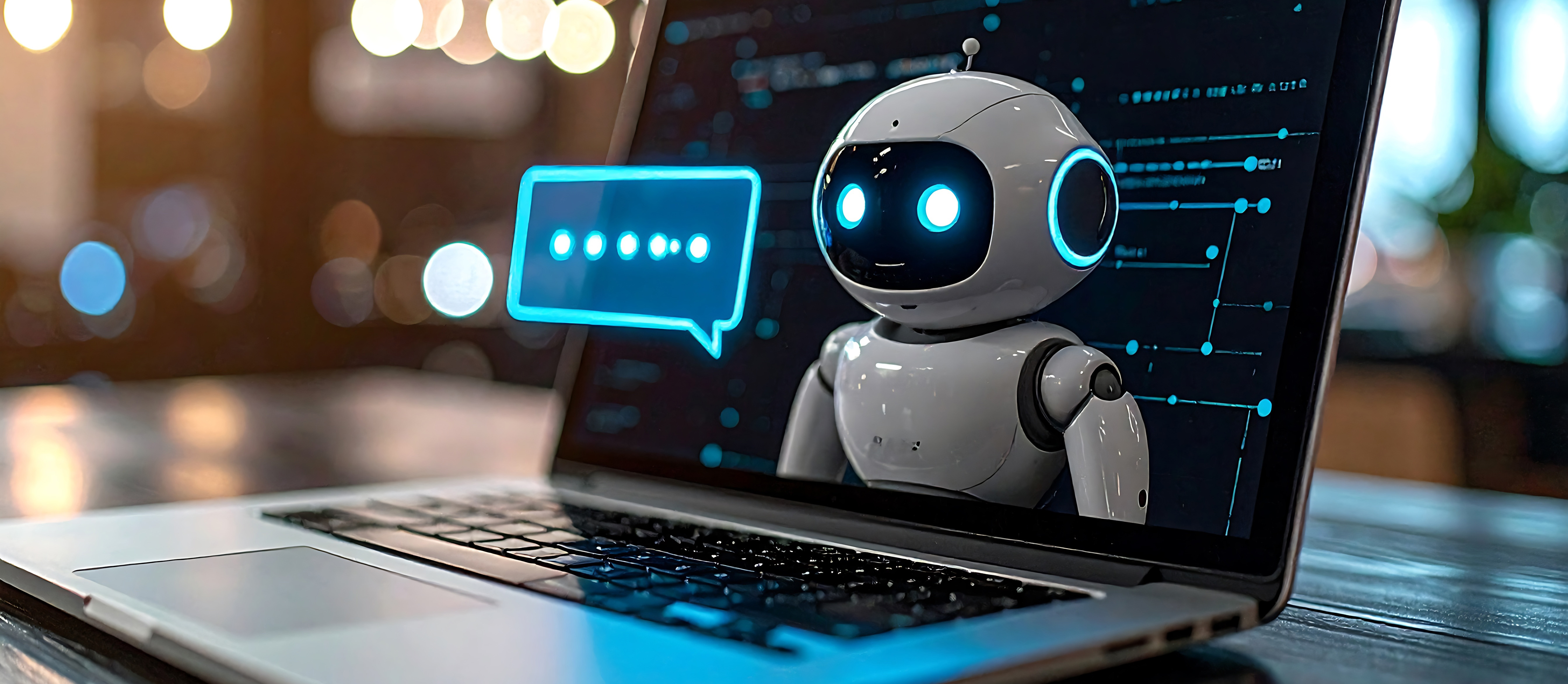

AI assistants are being integrated into apps across industries. It feels like they are everywhere. But building an assistant that can actually deliver results is far more difficult than it sounds.

At Lunavi, we’ve built and deployed multiple AI KnowledgeAssistants designed to help clients access industries. Through extensive testing and refinement, we’ve learned what separates an impressive demo from a dependable business tool.

While some see LLMs or assistants as magic, they aren’t. They’re engineered systems that rely on data quality, cost awareness, testing discipline, and user feedback.

An AI Knowledge Assistant combines a Large Language Model (LLM)—such as GPT-4 or GPT-5—with company data sources to provide accurate, conversational answers.

Here’s how it works:

In short: User → Prompt → LLM + RAG → Response →Feedback.

A Knowledge Assistant helps get clear, accurate answers from company data without digging through documents or systems.

Every reliable assistant starts with reliable data. Yet many projects stumble here.

Common data issues include:

How to address them:

If users can’t find an answer, it’s often a data problem rather than a model problem.

Unlike traditional software, AI outputs are non-deterministic—the same question may produce slightly different answers each time. This makes string-based unit testing ineffective.

We use another LLM to grade responses for accuracy, completeness, relevance, and precision. Each answer receives a numerical score using a structured grading prompt.

These automated tests run in CI/CD pipelines (such asPlaywright + Allure in Azure DevOps) and store full meta data—from model version to temperature settings—for reproducibility.

Across our experience building and maintaining Knowledge Assistants, five principles stand out:

Design for constraints.

Token and cost limits define your architecture.

Test constantly.

Automated, AI-based testing keeps accuracy high.

Close the feedback loop.

Real user input drives relevance and improvement.

Keep data fresh.

Regular updates sustain accuracy and trust.

Log everything.

Prompts, models, and results fuel future fine-tuning.

Knowledge Assistants are never truly finished. Their effectiveness depends on continuous refinement based on data and user interaction.

AI is powerful, but its value depends on how responsibly and thoughtfully it’s implemented. Governance, iteration, and empathy matter as much as model selection.

At Lunavi, our mission is to help organizations build intelligent systems that solve real problems, stay accurate, and earn user confidence.

An effective AI Knowledge Assistant is measured by how reliably it helps people find the right information—not by how sophisticated it sounds.